Introduction

LogikHub uses AI models to generate documentation and answer your questions. You can choose which models it uses and tune how they behave to suit your needs. In this post, we’ll explain the available model types and two important settings: reasoning effort and temperature.

Understanding the Different AI Model Types

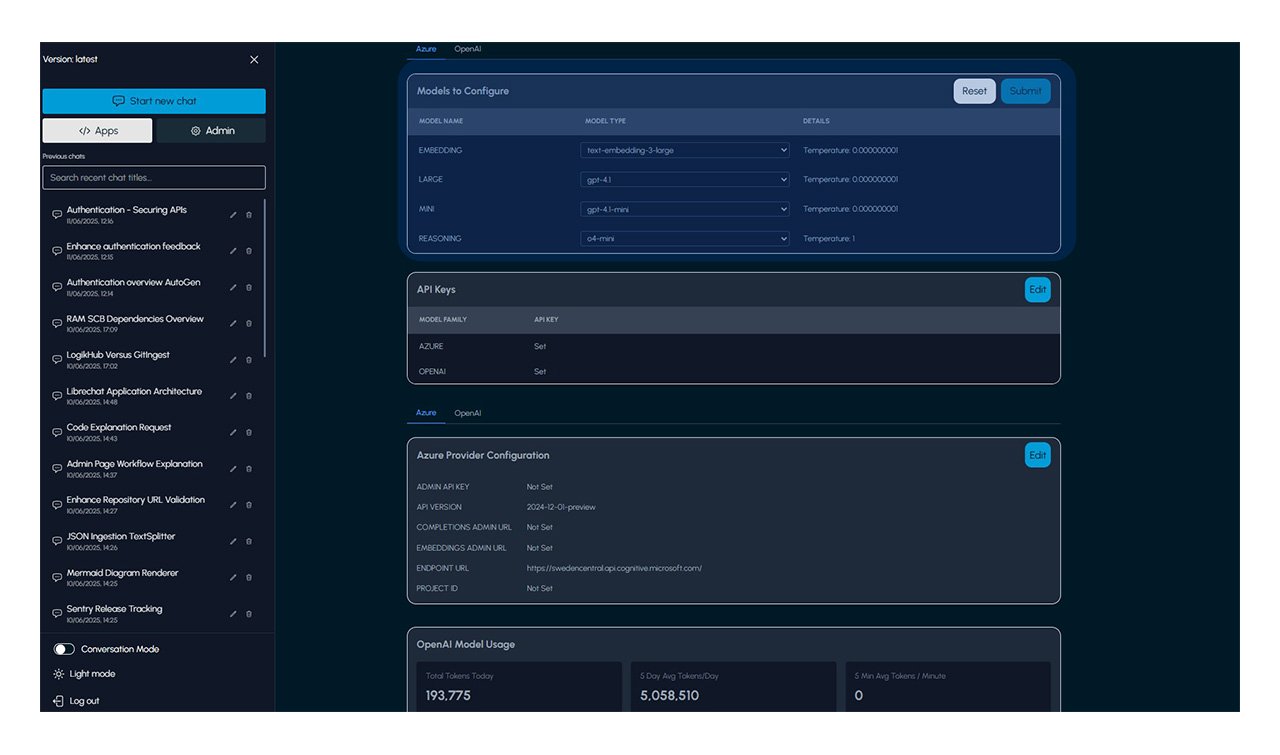

LogikHub supports both native OpenAI and Azure OpenAI models, allowing you to pick based on compliance, latency, or features. On the Admin page, you can switch between providers and select from four key model types:

- Mini: A lightweight model designed for speed and cost-efficiency. In “Conversation” mode, LogikHub uses it together with the Large model, depending on how complex the user’s query is.

- Large: A full-scale model optimized for complex, high-accuracy tasks. It provides the deeper understanding and more precise answers that LogikHub’s “Conversation” mode chat replies depend on.

- Reasoning: A specialized model that integrates conversation history with tool outputs to provide contextual, multi-step reasoning. This is used for advanced workflows requiring nuanced, context-aware responses and powers LogikHub’s “Reasoning” mode.

- Embedding: A model that doesn’t generate answers directly but converts text into numerical vector representations. This lets LogikHub identify relevant documents to supply to the other models, leading to more accurate and relevant answers.
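To make the embedding idea concrete, here is a minimal sketch of ranking documents by cosine similarity, a common way to compare embedding vectors. The file names and the tiny three-dimensional vectors are made up for illustration, and this is not necessarily how LogikHub implements its retrieval.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs):
    """Return document names ordered from most to least similar to the query."""
    return sorted(
        doc_vecs,
        key=lambda name: cosine_similarity(query_vec, doc_vecs[name]),
        reverse=True,
    )


# Hypothetical toy vectors; real embeddings have hundreds or thousands of dimensions.
docs = {
    "auth.md": [0.9, 0.1, 0.0],
    "billing.md": [0.1, 0.9, 0.2],
    "deploy.md": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # stands in for the embedding of a login-related question

ranking = rank_documents(query, docs)
print(ranking)  # most similar document first
```

The top-ranked documents are the ones that would be handed to a generating model as context.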

What is ‘Reasoning Effort’?

Reasoning effort controls how much computation and depth of analysis the AI applies when generating a response. Increasing it helps the AI connect information across your entire codebase, giving richer, more nuanced, and more context-aware answers. The trade-off is that higher reasoning effort requires more processing power and increases response times.

What is ‘Temperature’?

Temperature controls the randomness or creativity in the AI’s responses.

- Lower values (e.g., 0.0–0.3) generate focused, predictable text—ideal for accurate and direct code explanations.

- Higher values (e.g., 0.7 and above) create more diverse and creative outputs—useful for brainstorming or exploratory writing.

LogikHub provides presets for the models, so you can choose the values that best suit your tasks.
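To see why a lower temperature gives more predictable text, it helps to look at how temperature is typically applied: the model's raw scores for candidate tokens are divided by the temperature before being turned into probabilities. The sketch below shows that general technique with made-up scores. It assumes a strictly positive temperature (a setting of 0.0 is usually handled as "always pick the top token") and is not LogikHub-specific code.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after scaling them by temperature."""
    if temperature <= 0:
        raise ValueError("this sketch only handles temperature > 0")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens

low = softmax_with_temperature(logits, 0.2)   # sharp: top token dominates
high = softmax_with_temperature(logits, 1.0)  # flatter: more variety when sampling

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At the lower temperature almost all of the probability lands on the highest-scoring token, while at the higher temperature the alternatives keep a meaningful share, which is where the extra variety comes from.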

How to Change Models and Settings

It is easy to experiment with the different models. From LogikHub’s settings or admin panel, you can:

- Pick your model provider (OpenAI or Azure OpenAI)

- Select the model type that best fits your task

- Adjust both reasoning effort and temperature to balance accuracy, insight depth, and response style

- Experiment to find the setup that works best for your team’s workflow
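As a way to think about the choices above, the following sketch models them as a small validated settings object. Everything in it is hypothetical: the `ModelSettings` class, the field names, the "low"/"medium"/"high" effort labels, and the 0.0 to 2.0 temperature range are assumptions for illustration, not LogikHub's actual configuration format.

```python
from dataclasses import dataclass

# All names and allowed values below are assumptions made for this sketch.
PROVIDERS = {"openai", "azure_openai"}
MODEL_TYPES = {"mini", "large", "reasoning", "embedding"}
EFFORT_LEVELS = {"low", "medium", "high"}


@dataclass(frozen=True)
class ModelSettings:
    provider: str
    model_type: str
    temperature: float = 0.2
    reasoning_effort: str = "medium"

    def __post_init__(self):
        # Reject combinations that fall outside the assumed allowed values.
        if self.provider not in PROVIDERS:
            raise ValueError(f"unknown provider: {self.provider}")
        if self.model_type not in MODEL_TYPES:
            raise ValueError(f"unknown model type: {self.model_type}")
        if self.reasoning_effort not in EFFORT_LEVELS:
            raise ValueError(f"unknown reasoning effort: {self.reasoning_effort}")
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be between 0.0 and 2.0")


# A low-temperature setup for direct, predictable code explanations.
docs_settings = ModelSettings(
    provider="azure_openai", model_type="large", temperature=0.1
)
print(docs_settings)
```

Writing the options down this way makes it easy to keep a few named setups side by side and compare them as you experiment.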

Optimizing these configurations lets you harness LogikHub’s AI to best support your developer workflows and documentation needs.
